Why Data Quality Does Not Guarantee ETL Quality, and How CoeurData Fills That Gap

Modern platforms ensure data correctness, but they do not ensure that the pipeline is engineered with clarity, efficiency, consistency or maintainability.

CoeurData Editorial Team • 6 min read

Across many data engineering teams, a familiar belief continues to circulate: if the data is correct, then the ETL or ELT pipelines that produced it must also be correct.

This belief feels reasonable, but it is not accurate.

Modern Platforms and Their Capabilities

Modern platforms such as Snowflake, Informatica IDMC, Databricks, Azure Data Factory, AWS Glue, dbt, PowerCenter and DataStage have transformed how organizations integrate, manage and consume data. These platforms offer strong capabilities in data quality, governance, operational reliability and runtime performance.

Examples include Cloud Assurance and metadata intelligence in IDMC, Unity Catalog and Delta expectations in Databricks, Purview-based lineage in Azure Data Factory, the Glue Data Catalog and data quality features in AWS Glue, data tests and documentation in dbt, and strong metadata and workflow governance in PowerCenter and DataStage. Snowflake also provides platform-level governance and reliability features such as Horizon, masking policies, access policies and native performance optimization.

Yet none of these capabilities examine the detailed engineering choices inside the transformation logic that developers write. They ensure that the data is correct. They do not ensure that the pipeline is engineered with clarity, efficiency, consistency or maintainability.

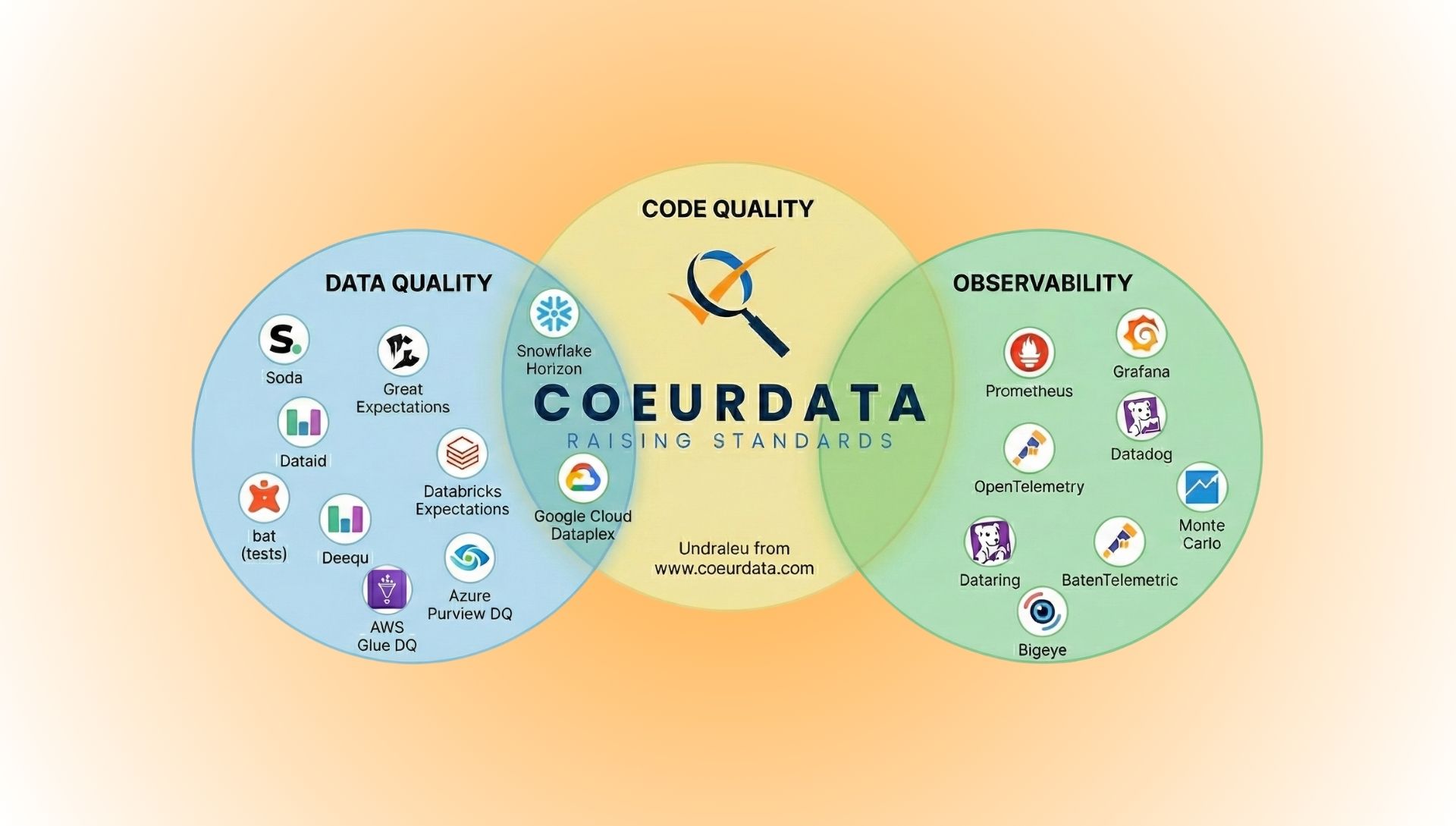

This distinction is the reason CoeurData created Undraleu, a platform dedicated to engineering quality across the modern data stack.

Data Quality and ETL Quality Are Not the Same Thing

A dataset can pass every data quality check even when the pipeline that produced it contains inefficient SQL, unnecessary complexity, unclear naming, non-reusable patterns or transformations that quietly increase compute costs in platforms such as Snowflake or Databricks. Data quality verifies the outcome. ETL quality verifies the engineering. One does not guarantee the other.
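To make the distinction concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, both queries and the `passes_quality_checks` function are hypothetical illustrations, not taken from any real pipeline. Two versions of the same transformation produce identical output and pass the same outcome-level checks, even though one of them is engineered far less cleanly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO orders VALUES (1, 10, 25.0), (2, 10, 75.0), (3, 20, 40.0);
""")

# Version A: a single aggregation over the table.
clear_sql = """
    SELECT customer_id, SUM(amount) AS total_amount
    FROM orders
    GROUP BY customer_id
    ORDER BY customer_id
"""

# Version B: same result, but a correlated subquery is evaluated per row
# and DISTINCT is needed to hide the duplicate rows this produces.
wasteful_sql = """
    SELECT DISTINCT o.customer_id,
           (SELECT SUM(i.amount) FROM orders i
            WHERE i.customer_id = o.customer_id) AS total_amount
    FROM orders o
    ORDER BY o.customer_id
"""

def passes_quality_checks(rows):
    """Hypothetical outcome-level checks: no nulls, unique keys, positive totals."""
    keys = [row[0] for row in rows]
    no_nulls = all(value is not None for row in rows for value in row)
    unique_keys = len(keys) == len(set(keys))
    positive_totals = all(row[1] > 0 for row in rows)
    return no_nulls and unique_keys and positive_totals

clear_rows = conn.execute(clear_sql).fetchall()
wasteful_rows = conn.execute(wasteful_sql).fetchall()

# Both versions are indistinguishable from the data's point of view.
print(clear_rows == wasteful_rows)
print(passes_quality_checks(clear_rows), passes_quality_checks(wasteful_rows))
```

Nothing in the output reveals which query produced it. Only an inspection of the SQL itself would surface the difference.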

Why ETL and ELT Platforms Do Not Address Engineering Quality

Each major platform focuses on higher-order capabilities that are essential for success. Their mission is to ensure integration scale, runtime reliability, robust governance, metadata intelligence and performance. These are broad and strategic concerns.

However, transformation-level engineering decisions live inside the logic that developers write, and these decisions vary significantly across organizations. Platform vendors do not attempt to govern this layer because it sits close to each client’s internal practices and engineering culture.

CoeurData built Undraleu to focus precisely on this engineering layer, which is both important and underserved.

Why Data Engineering Service Providers Also Do Not Focus on Engineering Quality

Service providers concentrate on delivering business outcomes such as modernization, cloud migration, integration and performance improvements. Their work revolves around timelines, functional delivery and platform enablement. They rarely define or enforce engineering quality because every organization has its own standards, naming conventions, performance expectations and cost management practices.

Large delivery teams also work across many platforms at once. Without an automated system that examines the logic itself, it becomes difficult to maintain consistency among developers.

This creates a natural gap. Platforms govern operations and service providers deliver functionality, but neither governs the engineering quality of the pipelines. Undraleu fills this gap.

Why CoeurData Created Undraleu

As organizations adopt Databricks, IDMC, ADF, Talend, AWS Glue, dbt and related technologies, the data engineering environment becomes broader and more diverse. The need for consistent engineering discipline increases sharply.

Undraleu does the following:

- Evaluates SQL, PySpark, Talend jobs, Informatica mappings, ADF definitions, Glue scripts, dbt models and other forms of transformation logic.

- Allows organizations to express their engineering standards as rules.

- Enforces the standards across every data engineer and every piece of ETL code.

- Identifies poor engineering patterns and highlights opportunities for improvement.

- Flags maintainability and complexity issues, which are often neglected by development teams.

- Provides early detection of engineering issues through CI/CD integration.

- Creates consistency across all platforms used by the organization.
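The idea of expressing standards as rules and enforcing them in CI can be sketched in a few lines of Python. This is an illustrative toy only, not Undraleu's implementation or API: the rule identifiers, the `check_sql` function and the `ci_exit_code` helper are all hypothetical, and line-by-line regular expressions are a crude stand-in for real parsing of transformation logic.

```python
import re
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Rule:
    rule_id: str
    description: str
    pattern: re.Pattern

@dataclass(frozen=True)
class Finding:
    rule_id: str
    line_no: int
    description: str

# Hypothetical team standards; a real rule set would be organization-specific.
RULES = [
    Rule("SQL001", "Avoid SELECT *; list columns explicitly",
         re.compile(r"\bselect\s+\*", re.IGNORECASE)),
    Rule("SQL002", "Avoid ORDER BY ordinal positions",
         re.compile(r"\border\s+by\s+\d+", re.IGNORECASE)),
    Rule("SQL003", "Temporary tables must use the tmp_ prefix",
         re.compile(r"\bcreate\s+temp(?:orary)?\s+table\s+(?!tmp_)\w", re.IGNORECASE)),
]

def check_sql(sql: str, rules: List[Rule] = RULES) -> List[Finding]:
    """Return one finding for every rule that matches a line of the SQL text."""
    findings = []
    for line_no, line in enumerate(sql.splitlines(), start=1):
        for rule in rules:
            if rule.pattern.search(line):
                findings.append(Finding(rule.rule_id, line_no, rule.description))
    return findings

def ci_exit_code(findings: List[Finding]) -> int:
    """A CI step could fail the build by exiting non-zero when findings exist."""
    return 1 if findings else 0

sample = """CREATE TEMP TABLE staging_orders AS
SELECT * FROM raw_orders
ORDER BY 1"""

findings = check_sql(sample)
for finding in findings:
    print(f"line {finding.line_no}: {finding.rule_id} {finding.description}")
```

In a pipeline, a script like this would run against changed files and pass `ci_exit_code(findings)` to `sys.exit`, so that violations are caught before the code is merged. The data the query produces never enters the picture; the check looks only at how the logic is written.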

The Future of Data Engineering Requires an Engineering Quality Layer

The capabilities Undraleu provides sit outside the scope of both platform vendors and service providers, which is why it fills a critical need.

Good data does not automatically mean good engineering. As organizations operate across many platforms and clouds, engineering quality becomes essential to sustainability, efficiency and governance.

CoeurData believes that engineering quality should stand beside data quality as a foundation of the modern data stack.

Undraleu enables that vision. It improves consistency, reliability and cost efficiency across every platform an organization uses, including Talend, Databricks, IDMC, ADF, Glue and dbt.

The software industry embraced static analysis many years ago. Data engineering is now ready for the same evolution.

Undraleu is CoeurData’s commitment to raising the standard of data engineering.